Biomimicry is a fascinating approach to innovation—and its source is right outside your front door. Biomimicry is really nothing more than the process of emulating with technology the many tricks of nature. And nature, of course, is an astounding innovator. Consequently, we can discover many solutions to human engineering challenges simply by copying nature’s systems and strategies.

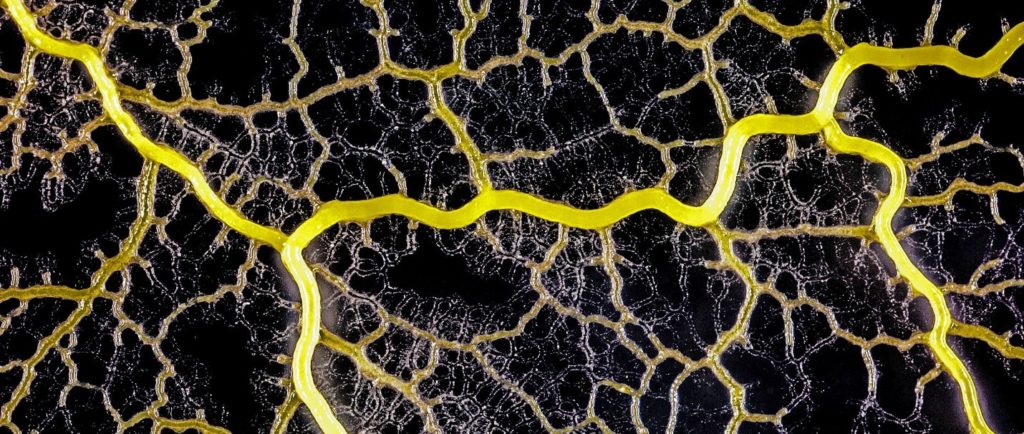

As Steve Jobs observed, “. . . the biggest innovations of the 21st century will be at the intersection of biology and technology.” It’s so true. Take, for example, photosynthesis. We discussed at Technica Curiosa how man-made catalysts are mimicking the action of plants to convert sunlight into energy, yielding artificial photosynthesis—a clean and potentially inexhaustible source of energy. The lowly slime mold, to cite another, has devised methods that could revolutionize network design. It turns out that slime mold is an exceptionally efficient forager: it creates extensive networks between food sources, constantly pruning connections, maintaining only the most productive pathways. What’s more, it is both resilient and fault-tolerant. How might its methods be copied to improve graph analytics? Or think of the eye, and the many ways it is represented in nature to serve very specific survival needs across species.

The slime mold Physarum polycephalum forms a network of cytoplasmic veins as it spreads across a surface in search of food.

This is our point of departure: innovations in vision systems. So let me open your eyes now to some amazing new developments in optics, inspired by nature, that could have a tremendous impact on how we build out the burgeoning sensor-driven economy.

As we pointed out in Moonshots, even though autonomous cars have logged millions of miles of accident-free driving, whenever one suffers so much as a fender-bender, it becomes front-page news. As you might recall, one of the biggest such stories involved 40-year-old Joshua Brown, a former Navy SEAL, whose Tesla Model S slammed into a semi-truck while the car was on Autopilot. It turns out that the Autopilot technology failed to apply the brakes because it didn’t see the truck: the color and light intensity of the tractor-trailer matched those of the background sky, so the camera system actually mistook the trailer for empty sky! And Brown died as a result.

For a surprising solution to this problem, we’ll have to wade into shallow subtropical waters. But before we do, it will be helpful to understand that anything having to do with optics, camera systems for example, is necessarily concerned with light. Light, such as that emitted by the sun or indoor lamps, vibrates in a variety of directions and in more than one plane. It is “unpolarized.” But here’s the big idea: it is possible to transform unpolarized light into polarized light, whose waves vibrate in a single plane. And the process of this transformation is called, you guessed it, polarization. Why does this matter? Well, if you happen to be a photographer, it’s likely that you sometimes attach a polarizing filter to your camera’s lens. It improves lighting effects, for example, by darkening the sky and increasing contrast. Now guess what? The light polarization of a tractor-trailer is different from that of the sky. See where this is going?
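To make the idea concrete, here is a minimal sketch of what a polarizing filter does to light, assuming an ideal linear filter. It uses Malus's law: the filter passes half of any unpolarized light, and a cos² fraction of linearly polarized light, depending on the angle between the filter and the light's plane of vibration. The function name and the "sky" and "trailer" numbers are made up for illustration; they are not measurements from the crash or from any real camera.

```python
import math


def through_polarizer(unpolarized, polarized, polarization_deg, filter_deg):
    """Intensity that passes an ideal linear polarizing filter.

    An ideal filter passes half of the unpolarized part of the light and a
    cos^2 fraction (Malus's law) of the linearly polarized part.
    """
    delta = math.radians(filter_deg - polarization_deg)
    return 0.5 * unpolarized + polarized * math.cos(delta) ** 2


# Hypothetical scene values: both surfaces have the same total brightness
# (100 units), so a camera that measures only intensity cannot separate them.
sky = {"unpolarized": 40.0, "polarized": 60.0, "polarization_deg": 0.0}
trailer = {"unpolarized": 100.0, "polarized": 0.0, "polarization_deg": 0.0}

for filter_deg in (0, 45, 90):
    sky_reading = through_polarizer(filter_deg=filter_deg, **sky)
    trailer_reading = through_polarizer(filter_deg=filter_deg, **trailer)
    print(f"filter at {filter_deg:>2} deg: "
          f"sky={sky_reading:5.1f}  trailer={trailer_reading:5.1f}")
```

In this toy setup the two surfaces are equally bright to the naked eye, yet their readings diverge as soon as a filter is rotated in front of the sensor. That is the same effect a photographer exploits to darken the sky.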

Now, while nature did not bless the human eye with the ability to distinguish the polarization of light, many animals are so endowed. Simply stated, had Tesla’s vision system been powered by a mantis shrimp, it would have easily discerned the difference between the trailer and the sky.

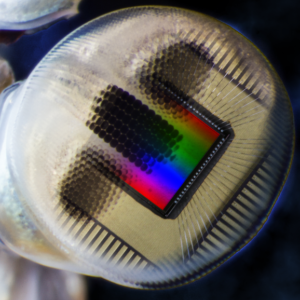

The amazing vision system of the mantis shrimp, a key ingredient in unimagined moonshots.

Indeed, the colorful mantis shrimp sports one of the most complex vision systems to be found anywhere in the animal kingdom. It is able to detect 16 spectral channels and four linear and two circular polarization channels, equipping it with an astounding range of imaging capabilities (the mantis shrimp eye also contains 12 color receptors, while humans have just three). It’s no surprise, then, that this creature’s visual system is a fantastic model for designing more robust imaging technologies. To this end, of particular note is the work of researchers at the University of Illinois at Urbana-Champaign and Washington University in St. Louis.

The eyes of the mantis shrimp can distinguish between wavelengths that differ by only about 1 to 5 nanometers, and they have a logarithmic response to the intensity of light. Inspired by this, the researchers adjusted the way the camera’s photodiodes convert light into an electrical current, yielding a response similar to that of the shrimp. The shrimp also builds polarized light detection directly into its photoreceptors, and the researchers mimicked this with the help of nanomaterials deposited onto their imaging chip. These nanomaterials essentially act as polarization filters at the pixel level, detecting polarization in the same way that the mantis shrimp sees it.
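Both ideas can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the researchers' actual design: the logarithmic formula and its `dark_level` parameter are hypothetical, and the polarization part assumes a common layout in which four neighboring pixels sit behind filters oriented at 0, 45, 90, and 135 degrees. From those four readings, the standard linear Stokes parameters give the degree of linear polarization (how polarized the light is) and the angle of linear polarization (which way it vibrates).

```python
import math


def log_response(intensity, dark_level=1.0):
    """Hypothetical logarithmic pixel response.

    A tenfold increase in light adds roughly one unit of output, so very
    dark and very bright parts of a scene fit in the same output range.
    """
    return math.log10(1.0 + intensity / dark_level)


def linear_stokes(i0, i45, i90, i135):
    """Linear Stokes parameters from four filtered pixel readings."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 vs. -45 degrees
    return s0, s1, s2


def polarization_of(i0, i45, i90, i135):
    """Return (degree, angle in degrees) of linear polarization."""
    s0, s1, s2 = linear_stokes(i0, i45, i90, i135)
    if s0 == 0:
        return 0.0, 0.0  # no light, so nothing to measure
    degree = math.hypot(s1, s2) / s0
    angle = 0.5 * math.degrees(math.atan2(s2, s1))
    return degree, angle


# Made-up readings for one four-pixel group.
unpolarized_patch = (50.0, 50.0, 50.0, 50.0)
polarized_patch = (50.0, 100.0, 50.0, 0.0)

print(polarization_of(*unpolarized_patch))
print(polarization_of(*polarized_patch))
print(log_response(100.0), log_response(1000.0))
```

Two patches with the same total intensity produce the same `s0` here, but very different degrees of polarization, which is the extra cue an intensity-only camera lacks.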

By mimicking the eye of the mantis shrimp, Illinois researchers have developed an ultra-sensitive camera capable of sensing both color and polarization. The bioinspired imager can solve imaging challenges for self-driving cars, and potentially improve early cancer detection.

And it works. The nature-inspired camera system was able to operate reliably in bright light or shadow, in tunnels and under foggy conditions. What’s more, it’s both small and cheap, with a fabrication cost of less than $10, meaning the technology could soon find its way into myriad automotive and other remote sensing applications. But get this: this new nature-inspired approach to imaging may also help detect cancerous cells in the body by analyzing the difference in light polarization between cancerous cells and normal tissues. Pretty cool.

For more on this, see the paper here.